Introduction

This article is based on the AOSP branch android-11.0.0_r25 and the kernel branch android-msm-wahoo-4.4-android11.

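In the previous article, "Android Source Code Analysis - Binder in the Framework Layer (Client Side)", we analyzed how a client sends a request to a server through the binder driver and then receives the server's reply. In this article we switch to the server's perspective: how the server receives a client's request through the binder driver, processes it, and sends the result back to the client.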

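ServiceManager

In the previous article we used ServiceManager as the server, and this article revolves around it again. ServiceManager is a rather special server, so we can also take the opportunity to see how it becomes the binder driver's context_manager.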

Process startup

ServiceManager runs in its own process. It is started by the init process, which parses it from the rc file frameworks/native/cmds/servicemanager/servicemanager.rc:

```
service servicemanager /system/bin/servicemanager
    class core animation
    user system
    group system readproc
    critical
    onrestart restart healthd
    onrestart restart zygote
    onrestart restart audioserver
    onrestart restart media
    onrestart restart surfaceflinger
    onrestart restart inputflinger
    onrestart restart drm
    onrestart restart cameraserver
    onrestart restart keystore
    onrestart restart gatekeeperd
    onrestart restart thermalservice
    writepid /dev/cpuset/system-background/tasks
    shutdown critical
```

The entry point of this service is the main function in frameworks/native/cmds/servicemanager/main.cpp:

```cpp
int main(int argc, char** argv) {
    if (argc > 2) {
        LOG(FATAL) << "usage: " << argv[0] << " [binder driver]";
    }

    const char* driver = argc == 2 ? argv[1] : "/dev/binder";

    sp<ProcessState> ps = ProcessState::initWithDriver(driver);
    ps->setThreadPoolMaxThreadCount(0);
    ps->setCallRestriction(ProcessState::CallRestriction::FATAL_IF_NOT_ONEWAY);

    sp<ServiceManager> manager = new ServiceManager(std::make_unique<Access>());
    if (!manager->addService("manager", manager, false,
                             IServiceManager::DUMP_FLAG_PRIORITY_DEFAULT).isOk()) {
        LOG(ERROR) << "Could not self register servicemanager";
    }

    IPCThreadState::self()->setTheContextObject(manager);
    ps->becomeContextManager(nullptr, nullptr);

    sp<Looper> looper = Looper::prepare(false);

    BinderCallback::setupTo(looper);
    ClientCallbackCallback::setupTo(looper, manager);

    while (true) {
        looper->pollAll(-1);
    }

    return EXIT_FAILURE;
}
```

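Initializing Binder

main first reads its arguments. According to the rc file above, driver is /dev/binder here. It then initializes this process's ProcessState singleton with that driver; from the previous article we know this runs binder_open and binder_mmap in the driver. Finally it configures the singleton: setThreadPoolMaxThreadCount(0) means the driver will not ask this process to spawn extra binder threads, and setCallRestriction(FATAL_IF_NOT_ONEWAY) makes any outgoing non-oneway call from this process abort.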

```cpp
sp<ProcessState> ProcessState::initWithDriver(const char* driver)
{
    Mutex::Autolock _l(gProcessMutex);
    if (gProcess != nullptr) {
        if (!strcmp(gProcess->getDriverName().c_str(), driver)) {
            return gProcess;
        }
        LOG_ALWAYS_FATAL("ProcessState was already initialized.");
    }

    if (access(driver, R_OK) == -1) {
        ALOGE("Binder driver %s is unavailable. Using /dev/binder instead.", driver);
        driver = "/dev/binder";
    }

    gProcess = new ProcessState(driver);
    return gProcess;
}
```

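access

Documentation: https://man7.org/linux/man-pages/man2/access.2.html

Prototype: int access(const char *pathname, int mode);

This function checks whether the calling process can access the given file in the requested way (the check uses the process's real UID and GID). It returns 0 on success; on failure it returns -1 and sets errno.

The mode argument is either F_OK or a bitwise OR of one or more of R_OK, W_OK and X_OK:

F_OK: the file exists

R_OK: the file is readable

W_OK: the file is writable

X_OK: the file is executable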
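The fallback branch of initWithDriver can be reproduced outside Android with the real POSIX access() call. The sketch below is a hypothetical helper (pickBinderDriver is not an AOSP function) that mirrors only the driver-selection logic; the singleton, the mutex and ALOGE are left out, and stderr stands in for the log.

```cpp
#include <unistd.h>   // access, R_OK
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper mirroring the check in ProcessState::initWithDriver:
// if the requested driver node is not readable, fall back to /dev/binder.
std::string pickBinderDriver(const std::string& requested) {
    if (access(requested.c_str(), R_OK) == -1) {
        // access() returned -1 and set errno (e.g. ENOENT or EACCES)
        std::fprintf(stderr, "Binder driver %s is unavailable. Using /dev/binder instead.\n",
                     requested.c_str());
        return "/dev/binder";
    }
    return requested;  // readable: keep the caller's choice
}
```

Note that the fallback does not check /dev/binder itself; if that node is also missing, the failure only surfaces later when ProcessState opens the driver.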

Registering as the Binder driver's context manager

Next, main calls ProcessState::becomeContextManager to register itself as the Binder driver's context manager:

```cpp
bool ProcessState::becomeContextManager()
{
    AutoMutex _l(mLock);

    flat_binder_object obj {
        .flags = FLAT_BINDER_FLAG_TXN_SECURITY_CTX,
    };

    int result = ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR_EXT, &obj);

    if (result != 0) {
        android_errorWriteLog(0x534e4554, "121035042");

        int unused = 0;
        result = ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR, &unused);
    }

    if (result == -1) {
        ALOGE("Binder ioctl to become context manager failed: %s\n", strerror(errno));
    }

    return result == 0;
}
```

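This issues an ioctl on the binder device with the command BINDER_SET_CONTEXT_MGR_EXT (falling back to BINDER_SET_CONTEXT_MGR if that fails). In the kernel, the request is handled by binder_ioctl: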

```c
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    ...
    switch (cmd) {
    ...
    case BINDER_SET_CONTEXT_MGR_EXT: {
        struct flat_binder_object fbo;

        if (copy_from_user(&fbo, ubuf, sizeof(fbo))) {
            ret = -EINVAL;
            goto err;
        }
        ret = binder_ioctl_set_ctx_mgr(filp, &fbo);
        if (ret)
            goto err;
        break;
    }
    ...
```

```c
static int binder_ioctl_set_ctx_mgr(struct file *filp,
                                    struct flat_binder_object *fbo)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    struct binder_context *context = proc->context;
    struct binder_node *new_node;
    kuid_t curr_euid = current_euid();

    mutex_lock(&context->context_mgr_node_lock);
    if (context->binder_context_mgr_node) {
        pr_err("BINDER_SET_CONTEXT_MGR already set\n");
        ret = -EBUSY;
        goto out;
    }
    ret = security_binder_set_context_mgr(proc->tsk);
    if (ret < 0)
        goto out;
    if (uid_valid(context->binder_context_mgr_uid)) {
        if (!uid_eq(context->binder_context_mgr_uid, curr_euid)) {
            pr_err("BINDER_SET_CONTEXT_MGR bad uid %d != %d\n",
                   from_kuid(&init_user_ns, curr_euid),
                   from_kuid(&init_user_ns,
                             context->binder_context_mgr_uid));
            ret = -EPERM;
            goto out;
        }
    } else {
        context->binder_context_mgr_uid = curr_euid;
    }
    new_node = binder_new_node(proc, fbo);
    if (!new_node) {
        ret = -ENOMEM;
        goto out;
    }
    binder_node_lock(new_node);
    new_node->local_weak_refs++;
    new_node->local_strong_refs++;
    new_node->has_strong_ref = 1;
    new_node->has_weak_ref = 1;
    context->binder_context_mgr_node = new_node;
    binder_node_unlock(new_node);
    binder_put_node(new_node);
out:
    mutex_unlock(&context->context_mgr_node_lock);
    return ret;
}
```

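The logic is simple. It first checks whether a context manager has already been set (returning -EBUSY if so), then performs permission checks: the SELinux hook security_binder_set_context_mgr, plus a check that the caller's euid matches the previously recorded context-manager uid, if one was recorded. Once these pass, it creates a new binder node with binder_new_node and stores it as the context manager node.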
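To make the order of these checks concrete, here is a simplified user-space model of binder_ioctl_set_ctx_mgr. FakeBinderContext and setContextMgr are hypothetical names; the SELinux hook, locking and node reference counting are deliberately omitted, and a bool stands in for the binder node.

```cpp
#include <cassert>
#include <cerrno>

// Hypothetical model of the fields binder_ioctl_set_ctx_mgr consults.
struct FakeBinderContext {
    bool hasContextMgrNode = false;  // context->binder_context_mgr_node != NULL
    bool uidValid = false;           // uid_valid(context->binder_context_mgr_uid)
    unsigned contextMgrUid = 0;      // context->binder_context_mgr_uid
};

// Returns 0, -EBUSY or -EPERM in the same order the driver checks them.
int setContextMgr(FakeBinderContext& ctx, unsigned callerEuid) {
    if (ctx.hasContextMgrNode) {
        return -EBUSY;                 // a context manager is already registered
    }
    if (ctx.uidValid) {
        if (ctx.contextMgrUid != callerEuid) {
            return -EPERM;             // uid was recorded for a different caller
        }
    } else {
        ctx.uidValid = true;           // first successful caller records its euid
        ctx.contextMgrUid = callerEuid;
    }
    ctx.hasContextMgrNode = true;      // stands in for binder_new_node(proc, fbo)
    return 0;
}
```

Nothing in the function above clears binder_context_mgr_uid, so in this model a second registration attempt (for example after the node has gone away) only succeeds for a caller with the same euid.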

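Processing Binder transactions in a Looper loop

The Looper here is the native Looper that also backs the Looper we use in everyday app development, so I won't go into detail in this article. All we need to know is that Looper::addFd registers a file descriptor to monitor, Looper::pollAll or Looper::pollOnce waits for events, and when an event arrives the registered LooperCallback::handleEvent is invoked.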
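The addFd / pollOnce / handleEvent contract described above can be illustrated with a toy loop. MiniLooper is a hypothetical class, not android::Looper: the real Looper is built on epoll and supports wake-ups, messages and more, while this sketch uses plain poll() and keeps only the "register an fd with a callback, then dispatch when it becomes readable" part.

```cpp
#include <poll.h>
#include <unistd.h>
#include <cassert>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified stand-in for android::Looper.
class MiniLooper {
public:
    // Like LooperCallback::handleEvent: return 1 to keep the fd, 0 to remove it.
    using Callback = std::function<int(int fd)>;

    void addFd(int fd, Callback cb) { callbacks_[fd] = std::move(cb); }

    // Waits up to timeoutMs (-1 = forever) and dispatches callbacks for readable fds.
    // Returns the number of callbacks invoked.
    int pollOnce(int timeoutMs) {
        std::vector<pollfd> fds;
        for (const auto& entry : callbacks_) fds.push_back({entry.first, POLLIN, 0});
        int n = poll(fds.data(), fds.size(), timeoutMs);
        if (n <= 0) return 0;  // timeout or error: nothing to dispatch
        int dispatched = 0;
        for (const pollfd& p : fds) {
            if (!(p.revents & POLLIN)) continue;
            auto it = callbacks_.find(p.fd);
            if (it == callbacks_.end()) continue;
            ++dispatched;
            if (it->second(p.fd) == 0) callbacks_.erase(it);
        }
        return dispatched;
    }

private:
    std::map<int, Callback> callbacks_;
};
```

In servicemanager the registered fd is the binder driver's fd and the callback is BinderCallback::handleEvent; a pipe is enough to exercise the same flow in this sketch.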

With that in mind, let's look at the BinderCallback class:

```cpp
class BinderCallback : public LooperCallback {
public:
    static sp<BinderCallback> setupTo(const sp<Looper>& looper) {
        sp<BinderCallback> cb = new BinderCallback;

        int binder_fd = -1;
        IPCThreadState::self()->setupPolling(&binder_fd);
        LOG_ALWAYS_FATAL_IF(binder_fd < 0, "Failed to setupPolling: %d", binder_fd);

        IPCThreadState::self()->flushCommands();

        int ret = looper->addFd(binder_fd,
                                Looper::POLL_CALLBACK,
                                Looper::EVENT_INPUT,
                                cb,
                                nullptr);
        LOG_ALWAYS_FATAL_IF(ret != 1, "Failed to add binder FD to Looper");

        return cb;
    }

    int handleEvent(int /* fd */, int /* events */, void* /* data */) override {
        IPCThreadState::self()->handlePolledCommands();
        return 1;
    }
};
```

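During startup, the servicemanager process calls BinderCallback::setupTo. This function first calls setupPolling, which sends a BC_ENTER_LOOPER command to the binder driver and obtains the binder device's file descriptor. It then calls Looper::addFd to monitor that descriptor, so that when the binder driver has something for this process, the Looper invokes BinderCallback::handleEvent to receive and process it.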

```cpp
status_t IPCThreadState::setupPolling(int* fd)
{
    if (mProcess->mDriverFD < 0) {
        return -EBADF;
    }

    mOut.writeInt32(BC_ENTER_LOOPER);
    flushCommands();
    *fd = mProcess->mDriverFD;
    pthread_mutex_lock(&mProcess->mThreadCountLock);
    mProcess->mCurrentThreads++;
    pthread_mutex_unlock(&mProcess->mThreadCountLock);
    return 0;
}
```

Binder transaction handling

BinderCallback overrides handleEvent, which calls IPCThreadState::handlePolledCommands to receive and process binder transactions:

```cpp
status_t IPCThreadState::handlePolledCommands()
{
    status_t result;

    do {
        result = getAndExecuteCommand();
    } while (mIn.dataPosition() < mIn.dataSize());

    processPendingDerefs();
    flushCommands();
    return result;
}
```

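Reading and handling responses

The key part of this function is getAndExecuteCommand. It always reads from the binder driver and handles at least one response; if unconsumed data is still left in the read buffer (mIn) afterwards, it repeats the process. In theory these extra iterations do not read from or write to the binder driver again: talkWithDriver only issues a read once mIn has been fully consumed, so they just process what is left in the read buffer.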

```cpp
status_t IPCThreadState::getAndExecuteCommand()
{
    status_t result;
    int32_t cmd;

    result = talkWithDriver();
    if (result >= NO_ERROR) {
        size_t IN = mIn.dataAvail();
        if (IN < sizeof(int32_t)) return result;
        cmd = mIn.readInt32();
        ...
        result = executeCommand(cmd);
        ...
    }

    return result;
}
```
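The interplay between handlePolledCommands, getAndExecuteCommand and talkWithDriver's "only read when mIn is exhausted" rule can be modeled in a few lines. PolledCommandLoop is hypothetical, not libbinder code: each int32_t stands for a whole command (real BR_* commands carry payloads), and readFromDriver simulates one ioctl returning a batch of commands.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical model of mIn and the handlePolledCommands loop.
struct PolledCommandLoop {
    std::vector<int32_t> in;          // stands in for mIn's data
    std::size_t pos = 0;              // stands in for mIn.dataPosition()
    int driverReads = 0;              // how many simulated ioctl reads happened
    std::function<std::vector<int32_t>()> readFromDriver;  // simulated driver
    std::vector<int32_t> executed;    // commands passed to "executeCommand"

    // Mirrors talkWithDriver's rule: skip the driver while mIn has unread data.
    void talkWithDriver() {
        if (pos < in.size()) return;
        in = readFromDriver();
        pos = 0;
        ++driverReads;
    }

    void getAndExecuteCommand() {
        talkWithDriver();
        if (pos >= in.size()) return;   // nothing available
        executed.push_back(in[pos++]);  // "cmd = mIn.readInt32(); executeCommand(cmd);"
    }

    void handlePolledCommands() {
        do {
            getAndExecuteCommand();
        } while (pos < in.size());
    }
};
```

With a driver that hands back three commands in one read, a single handlePolledCommands call executes all three while touching the driver only once.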

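Handling responses

getAndExecuteCommand also contains a lot of thread bookkeeping and other logic that we don't need to care about, so I have trimmed it out. What remains is clear: first read data from the binder driver via talkWithDriver, then read the BR response code from that data, and finally call executeCommand to continue processing.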

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
{
    BBinder* obj;
    RefBase::weakref_type* refs;
    status_t result = NO_ERROR;

    switch ((uint32_t)cmd) {
    ...
    case BR_TRANSACTION_SEC_CTX:
    case BR_TRANSACTION:
        {
            binder_transaction_data_secctx tr_secctx;
            binder_transaction_data& tr = tr_secctx.transaction_data;

            if (cmd == (int) BR_TRANSACTION_SEC_CTX) {
                result = mIn.read(&tr_secctx, sizeof(tr_secctx));
            } else {
                result = mIn.read(&tr, sizeof(tr));
                tr_secctx.secctx = 0;
            }

            ALOG_ASSERT(result == NO_ERROR,
                "Not enough command data for brTRANSACTION");
            if (result != NO_ERROR) break;

            Parcel buffer;
            buffer.ipcSetDataReference(
                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                tr.data_size,
                reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                tr.offsets_size/sizeof(binder_size_t), freeBuffer, this);

            ...

            Parcel reply;
            status_t error;
            if (tr.target.ptr) {
                if (reinterpret_cast<RefBase::weakref_type*>(
                        tr.target.ptr)->attemptIncStrong(this)) {
                    error = reinterpret_cast<BBinder*>(tr.cookie)->transact(tr.code, buffer,
                            &reply, tr.flags);
                    reinterpret_cast<BBinder*>(tr.cookie)->decStrong(this);
                } else {
                    error = UNKNOWN_TRANSACTION;
                }
            } else {
                error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
            }

            if ((tr.flags & TF_ONE_WAY) == 0) {
                LOG_ONEWAY("Sending reply to %d!", mCallingPid);
                if (error < NO_ERROR) reply.setError(error);
                sendReply(reply, 0);
            } else {
                ...
            }
            ...
        }
        break;
    ...
    }

    if (result != NO_ERROR) {
        mLastError = result;
    }

    return result;
}
```

We focus on the BR_TRANSACTION case of this function; everything else has been trimmed.

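First, the function reads a binder_transaction_data from the read buffer. As we know, this struct records the address, size and other information of the actual payload. It then creates a Parcel to act as the buffer and, via ipcSetDataReference, points it at the payload described by binder_transaction_data. The data is not copied: the Parcel references the buffer the driver placed in this process's mmap'd region, and freeBuffer releases it once the Parcel is done with it.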

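Next, it locates the local BBinder object. For ServiceManager, tr.target.ptr is 0 (its context manager node was created from a flat_binder_object whose binder pointer is 0), so the else branch uses the_context_object, which is the ServiceManager object registered with setTheContextObject in main. For other binder servers, the BBinder is obtained from tr.cookie. In both cases BBinder::transact is then called: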
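The target-selection branch just described can be modeled as follows. FakeBBinder, FakeTransactionData and dispatch are hypothetical names: the weak-to-strong reference promotion (attemptIncStrong / decStrong) is omitted, and in the model targetPtr is only tested for zero, whereas in libbinder it holds the address of the object's weakref.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical stand-in for a local BBinder.
struct FakeBBinder {
    std::string name;
    std::string transact(uint32_t code) { return name + ":" + std::to_string(code); }
};

// Hypothetical stand-in for the fields of binder_transaction_data used here.
struct FakeTransactionData {
    uintptr_t targetPtr;  // tr.target.ptr: 0 for the context manager node
    uintptr_t cookie;     // tr.cookie: address of the local BBinder
    uint32_t code;        // tr.code
};

// Mirrors the branch in executeCommand's BR_TRANSACTION case.
std::string dispatch(const FakeTransactionData& tr, FakeBBinder* contextObject) {
    if (tr.targetPtr) {
        return reinterpret_cast<FakeBBinder*>(tr.cookie)->transact(tr.code);
    }
    return contextObject->transact(tr.code);  // the_context_object
}
```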

```cpp
status_t BBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    data.setDataPosition(0);

    if (reply != nullptr && (flags & FLAG_CLEAR_BUF)) {
        reply->markSensitive();
    }

    status_t err = NO_ERROR;
    switch (code) {
        ...
        default:
            err = onTransact(code, data, reply, flags);
            break;
    }

    if (reply != nullptr) {
        reply->setDataPosition(0);
        if (reply->dataSize() > LOG_REPLIES_OVER_SIZE) {
            ALOGW("Large reply transaction of %zu bytes, interface descriptor %s, code %d",
                  reply->dataSize(), String8(getInterfaceDescriptor()).c_str(), code);
        }
    }

    return err;
}
```

onTransact

This function mainly calls onTransact, a virtual function that subclasses can override. ServiceManager inherits from BnServiceManager, and BnServiceManager overrides onTransact. The inheritance chain is:

ServiceManager -> BnServiceManager -> BnInterface<IServiceManager> -> IServiceManager & BBinder
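This layering is a template-method pattern: the base class's transact does the shared work and delegates to a virtual onTransact, the generated Bn class unpacks arguments in onTransact and calls a pure virtual method, and the concrete class implements that method. The sketch below shows the same three layers with hypothetical classes (MiniBBinder, MiniBnEcho, MiniEcho); -1 is a placeholder for UNKNOWN_TRANSACTION and an int replaces the Parcels.

```cpp
#include <cassert>
#include <cstdint>

// Plays the role of BBinder: shared entry point, overridable onTransact.
class MiniBBinder {
public:
    virtual ~MiniBBinder() = default;
    int transact(uint32_t code, int arg, int* reply) {
        // (the real BBinder::transact rewinds the data Parcel and handles built-in codes here)
        return onTransact(code, arg, reply);
    }
protected:
    virtual int onTransact(uint32_t /*code*/, int /*arg*/, int* /*reply*/) {
        return -1;  // placeholder for UNKNOWN_TRANSACTION
    }
};

// Plays the role of the AIDL-generated BnServiceManager: decode, then call a pure virtual.
class MiniBnEcho : public MiniBBinder {
public:
    static constexpr uint32_t TRANSACTION_twice = 1;
protected:
    int onTransact(uint32_t code, int arg, int* reply) override {
        if (code == TRANSACTION_twice) {
            *reply = twice(arg);
            return 0;
        }
        return MiniBBinder::onTransact(code, arg, reply);
    }
    virtual int twice(int x) = 0;
};

// Plays the role of ServiceManager: the actual functionality.
class MiniEcho : public MiniBnEcho {
protected:
    int twice(int x) override { return 2 * x; }
};
```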

BnServiceManager is generated by the AIDL tool (AIDL can generate both Java and C++ code). Here is an example of the generated code:

```cpp
::android::status_t BnServiceManager::onTransact(uint32_t _aidl_code,
        const ::android::Parcel& _aidl_data, ::android::Parcel* _aidl_reply, uint32_t _aidl_flags) {
  ::android::status_t _aidl_ret_status = ::android::OK;
  switch (_aidl_code) {
  case BnServiceManager::TRANSACTION_getService:
  {
    ::std::string in_name;
    ::android::sp<::android::IBinder> _aidl_return;
    if (!(_aidl_data.checkInterface(this))) {
      _aidl_ret_status = ::android::BAD_TYPE;
      break;
    }
    _aidl_ret_status = _aidl_data.readUtf8FromUtf16(&in_name);
    if (((_aidl_ret_status) != (::android::OK))) {
      break;
    }
    if (auto st = _aidl_data.enforceNoDataAvail(); !st.isOk()) {
      _aidl_ret_status = st.writeToParcel(_aidl_reply);
      break;
    }
    ::android::binder::Status _aidl_status(getService(in_name, &_aidl_return));
    _aidl_ret_status = _aidl_status.writeToParcel(_aidl_reply);
    if (((_aidl_ret_status) != (::android::OK))) {
      break;
    }
    if (!_aidl_status.isOk()) {
      break;
    }
    _aidl_ret_status = _aidl_reply->writeStrongBinder(_aidl_return);
    if (((_aidl_ret_status) != (::android::OK))) {
      break;
    }
  }
  break;
  ...
  }
  if (_aidl_ret_status == ::android::UNEXPECTED_NULL) {
    _aidl_ret_status = ::android::binder::Status::fromExceptionCode(
        ::android::binder::Status::EX_NULL_POINTER).writeOverParcel(_aidl_reply);
  }
  return _aidl_ret_status;
}
```

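The generated code is poorly formatted and hard to read, so I reformatted it and kept only the part we need. The main flow is: read the required arguments from data, call the corresponding function with them, write the status into reply, and finally write the return value into reply. If we put this side by side with the Java file that AIDL generated in the previous article, we can see that the read and write orders match exactly in both directions: the Java proxy writes the interface token and the name that this stub reads, and this stub writes the status and the binder that the proxy reads back.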

```java
@Override
public android.os.IBinder getService(java.lang.String name) throws android.os.RemoteException
{
    android.os.Parcel _data = android.os.Parcel.obtain();
    android.os.Parcel _reply = android.os.Parcel.obtain();
    android.os.IBinder _result;
    try {
        _data.writeInterfaceToken(DESCRIPTOR);
        _data.writeString(name);
        boolean _status = mRemote.transact(Stub.TRANSACTION_getService, _data, _reply, 0);
        _reply.readException();
        _result = _reply.readStrongBinder();
    }
    finally {
        _reply.recycle();
        _data.recycle();
    }
    return _result;
}
```
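Why the orders must match can be shown with a toy sequential parcel. MiniParcel, stubGetService and proxyGetService are hypothetical; strings stand in for every Parcel type, the descriptor string is illustrative, and -1 is a placeholder for BAD_TYPE. The only property modeled is the one Parcel relies on: values come back strictly in the order they were written.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical sequential "parcel": read() returns values in write order.
class MiniParcel {
public:
    void write(const std::string& v) { items_.push_back(v); }
    std::string read() {
        if (pos_ >= items_.size()) throw std::out_of_range("parcel exhausted");
        return items_[pos_++];
    }
private:
    std::vector<std::string> items_;
    std::size_t pos_ = 0;
};

const std::string kDescriptor = "android.os.IServiceManager";  // illustrative token

// Server side, shaped like BnServiceManager::onTransact for getService.
int stubGetService(MiniParcel& data, MiniParcel& reply) {
    if (data.read() != kDescriptor) return -1;  // checkInterface failed
    std::string name = data.read();             // readUtf8FromUtf16
    reply.write("OK");                          // status first ...
    reply.write("binder-for-" + name);          // ... then the return value
    return 0;
}

// Client side, shaped like the Java proxy's getService.
std::string proxyGetService(const std::string& name) {
    MiniParcel data, reply;
    data.write(kDescriptor);                    // writeInterfaceToken
    data.write(name);                           // writeString
    if (stubGetService(data, reply) != 0)       // stands in for mRemote.transact
        throw std::runtime_error("transact failed");
    if (reply.read() != "OK")                   // readException
        throw std::runtime_error("remote exception");
    return reply.read();                        // readStrongBinder
}
```

Swapping any pair of write calls on one side without doing the same on the other would make the reader pick up the wrong value, which is exactly why the generated proxy and stub are kept in lockstep.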

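The actual implementation

Now let's look at where the functionality is really implemented: getService. Following the inheritance chain above, ServiceManager inherits (through BnServiceManager) from IServiceManager and implements its pure virtual functions, getService among them: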

```cpp
Status ServiceManager::getService(const std::string& name, sp<IBinder>* outBinder) {
    *outBinder = tryGetService(name, true);
    return Status::ok();
}
```

```cpp
sp<IBinder> ServiceManager::tryGetService(const std::string& name, bool startIfNotFound) {
    auto ctx = mAccess->getCallingContext();

    sp<IBinder> out;
    Service* service = nullptr;
    if (auto it = mNameToService.find(name); it != mNameToService.end()) {
        service = &(it->second);

        if (!service->allowIsolated) {
            uid_t appid = multiuser_get_app_id(ctx.uid);
            bool isIsolated = appid >= AID_ISOLATED_START && appid <= AID_ISOLATED_END;

            if (isIsolated) {
                return nullptr;
            }
        }
        out = service->binder;
    }

    if (!mAccess->canFind(ctx, name)) {
        return nullptr;
    }

    if (!out && startIfNotFound) {
        tryStartService(name);
    }

    if (out) {
        service->guaranteeClient = true;
    }

    return out;
}
```

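We don't need to study the implementation in detail; just note that it returns the binder object of the requested service, which, as the onTransact code above shows, is then written into reply with writeStrongBinder.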
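The one non-obvious rule in tryGetService is the isolated-process check. The sketch below models just the lookup plus that check; the constants are assumed to match AOSP's definitions (per-user uid range 100000, isolated app ids 99000 to 99999) and multiuser_get_app_id is assumed to be uid modulo that range. FakeService and tryGetServiceModel are hypothetical, an empty string plays the role of nullptr, and the canFind permission check and lazy service start are omitted.

```cpp
#include <sys/types.h>  // uid_t
#include <cassert>
#include <map>
#include <string>

// Assumed to match AOSP's values; check your tree if in doubt.
constexpr uid_t AID_USER_OFFSET = 100000;
constexpr uid_t AID_ISOLATED_START = 99000;
constexpr uid_t AID_ISOLATED_END = 99999;

struct FakeService {
    std::string binder;   // stands in for sp<IBinder>
    bool allowIsolated;
};

uid_t appIdOf(uid_t uid) { return uid % AID_USER_OFFSET; }  // like multiuser_get_app_id

// Lookup + isolated check only; "" means "no binder returned".
std::string tryGetServiceModel(const std::map<std::string, FakeService>& services,
                               const std::string& name, uid_t callingUid) {
    auto it = services.find(name);
    if (it == services.end()) return "";
    const FakeService& service = it->second;
    if (!service.allowIsolated) {
        uid_t appid = appIdOf(callingUid);
        if (appid >= AID_ISOLATED_START && appid <= AID_ISOLATED_END) return "";
    }
    return service.binder;
}
```

Because the check works on the app id rather than the raw uid, an isolated process in a secondary user (for example uid 1099001, app id 99001) is refused just like one in user 0.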

Reply

Once the actual work is done, we return to IPCThreadState::executeCommand. For calls made without TF_ONE_WAY, the reply must be sent back to the requesting client:

```cpp
status_t IPCThreadState::sendReply(const Parcel& reply, uint32_t flags)
{
    status_t err;
    status_t statusBuffer;
    err = writeTransactionData(BC_REPLY, flags, -1, 0, reply, &statusBuffer);
    if (err < NO_ERROR) return err;

    return waitForResponse(nullptr, nullptr);
}
```

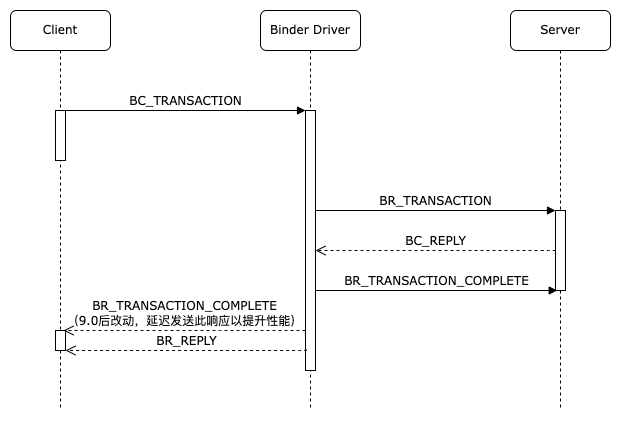

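writeTransactionData and waitForResponse were both analyzed in the previous article, so I won't repeat them here. Following the non-TF_ONE_WAY communication flow described there, after sending the BC_REPLY command to the binder driver we receive a BR_TRANSACTION_COMPLETE response. Since both arguments passed to waitForResponse are nullptr, that response makes the function jump out of its loop (goto finish), which ends this binder exchange: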

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    uint32_t cmd;
    int32_t err;

    while (1) {
        if ((err=talkWithDriver()) < NO_ERROR) break;
        err = mIn.errorCheck();
        if (err < NO_ERROR) break;
        if (mIn.dataAvail() == 0) continue;

        cmd = (uint32_t)mIn.readInt32();

        switch (cmd) {
        ...
        case BR_TRANSACTION_COMPLETE:
            if (!reply && !acquireResult) goto finish;
            break;
        ...
        }
    }

finish:
    if (err != NO_ERROR) {
        if (acquireResult) *acquireResult = err;
        if (reply) reply->setError(err);
        mLastError = err;
        logExtendedError();
    }

    return err;
}
```
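The exit rule that matters for sendReply can be isolated in a small model. waitForResponseModel and the FAKE_BR_* values are hypothetical (the real BR_* codes are defined in the kernel's binder UAPI header), a deque replaces talkWithDriver, and only three cases are modeled: BR_TRANSACTION_COMPLETE ends the loop when both output pointers are null, BR_REPLY always ends it, and anything else (such as BR_NOOP) keeps looping.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// Hypothetical response codes for the model only.
enum FakeBR : uint32_t { FAKE_BR_NOOP, FAKE_BR_TRANSACTION_COMPLETE, FAKE_BR_REPLY };

// Returns how many responses were consumed before the loop exits.
int waitForResponseModel(std::deque<uint32_t>& driverQueue, bool haveReply, bool haveAcquireResult) {
    int consumed = 0;
    while (!driverQueue.empty()) {
        uint32_t cmd = driverQueue.front();
        driverQueue.pop_front();
        ++consumed;
        switch (cmd) {
        case FAKE_BR_TRANSACTION_COMPLETE:
            if (!haveReply && !haveAcquireResult) return consumed;  // "goto finish"
            break;
        case FAKE_BR_REPLY:
            return consumed;  // a two-way caller stops once the reply arrives
        default:
            break;            // e.g. BR_NOOP: keep looping
        }
    }
    return consumed;
}
```

For sendReply (both arguments null) the loop stops at BR_TRANSACTION_COMPLETE, whereas a client waiting with a reply Parcel keeps going until BR_REPLY.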

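At this point, one round of message handling on the binder server side is complete. The Looper keeps monitoring the binder driver fd and waits for the next binder message to arrive.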

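Conclusion

After this series of articles, we should now have a fairly good overall understanding of how the Binder architecture communicates and how the process works. As for the remaining finer details, I will fill them in over time when I get the chance.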